Solutions for Cost-Effective EKS Control Plane Logging

Authors: Matthew Hopkins (moebaca)

Leveraging fully managed services from cloud providers such as AWS no doubt has significant benefits when it comes to saving on management overhead. Being able to instantly spin up a highly available, low-maintenance Kubernetes control plane is a boon when engineering hours are quite expensive in comparison. For example, one provisioned EKS cluster, at $0.10/hour or ~$73/month ($0.10 * 24 hours * 30.44 avg. days a month), is very likely cheaper than what it would cost for an engineer or two to build and manage a similar control plane with all the niceties (ease of setup, scaling, availability, security, etc.). However, giving up flexibility is one of the biggest tradeoffs when adopting a fully managed solution.
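As a quick sanity check on that figure, the arithmetic can be sketched in a few lines of Python. The constants are the ones quoted above; the function name and the `clusters` parameter are only illustrative.

```python
# Monthly cost of an EKS control plane, using the figures quoted in the text.
HOURLY_RATE_USD = 0.10       # control plane price per cluster per hour
HOURS_PER_DAY = 24
AVG_DAYS_PER_MONTH = 30.44   # average month length used throughout this post


def monthly_control_plane_cost(clusters: int = 1) -> float:
    """Return the approximate monthly control plane fee in USD."""
    return clusters * HOURLY_RATE_USD * HOURS_PER_DAY * AVG_DAYS_PER_MONTH


print(f"${monthly_control_plane_cost():.2f}/month")  # prints $73.06/month
```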

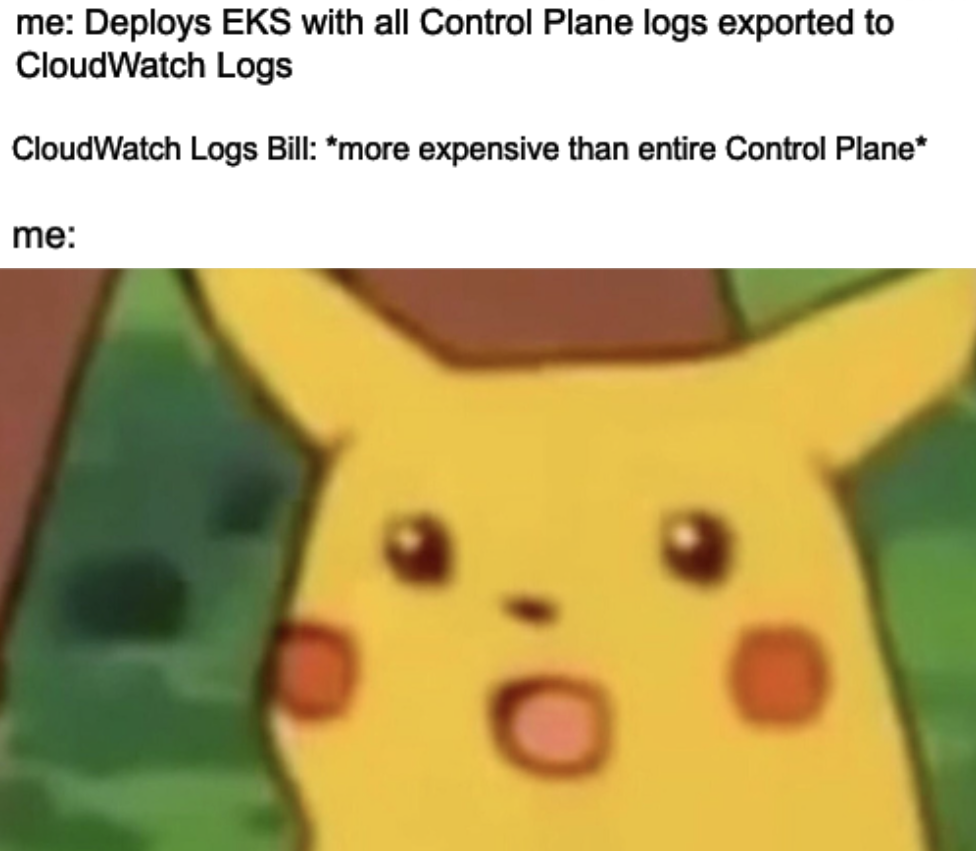

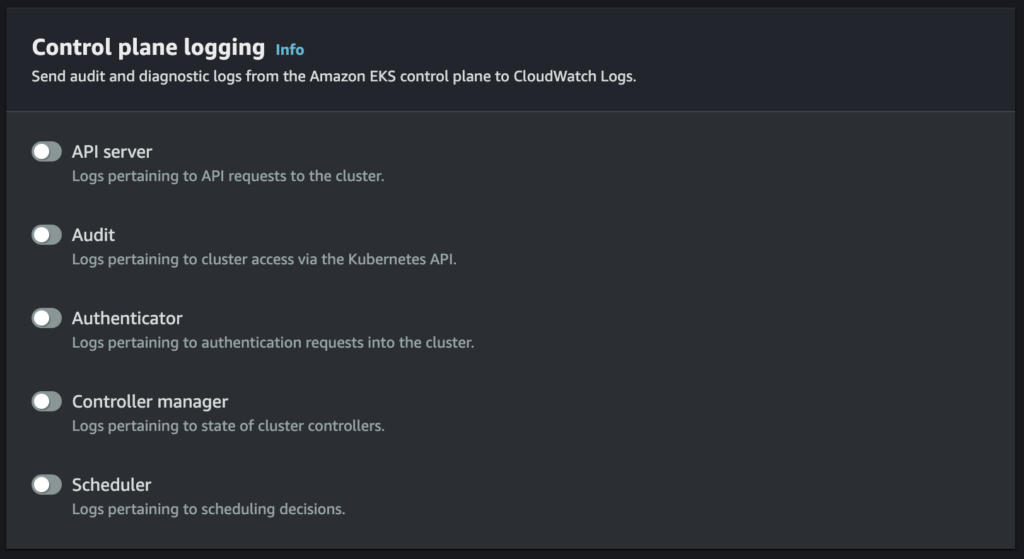

In the case of EKS, the inflexibility of control plane log shipping can be a source of frustration for engineers who yearn for the most cost-efficient configuration possible. EKS offers no more than a simple “On” or “Off” switch for each of the 5 log types (API server, Audit, Authenticator, Controller manager, Scheduler). Without any mechanism to filter logs or redirect them anywhere other than CloudWatch Logs, we as customers have very little room to optimize log ingest fees, especially for security-critical logs like “Audit”, which can be quite detailed and therefore expensive to ingest. As a result, the CloudWatch Logs bill can quickly become more expensive than the Control Plane fees!

This article briefly details the solutions we researched and implemented at Autify on our quest to cut down the CloudWatch Logs fees associated with EKS Control Plane logs, positioning us to save ~$2,000-$3,000/year on our AWS bill.

Solution 1 – CloudWatch Logs Infrequent Access

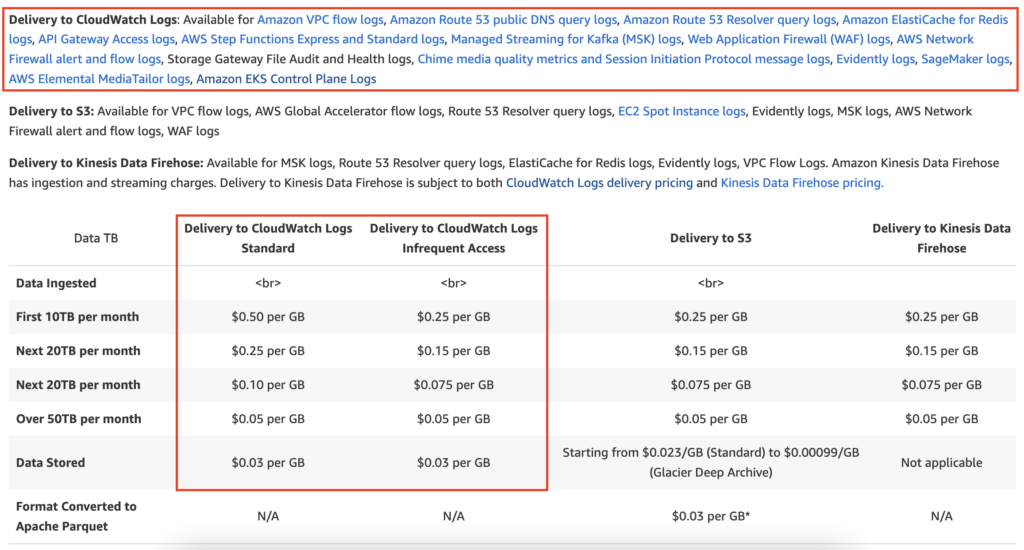

Just in case you missed it back around re:Invent 2023, the CloudWatch team announced a new log class called Infrequent Access. This feature’s biggest (only?) perk is that it offers cheaper log ingest to the tune of 50% off per GB (AWS must be hearing our cries!). Log storage and everything else remain the same price:

🗣 Note: EKS Control Plane Logs are considered Vended Logs and are not the only managed service logs that can benefit from this solution! As the screenshot above details – services such as VPC flow logs, API Gateway Access logs, WAF logs and more can all benefit from this solution!

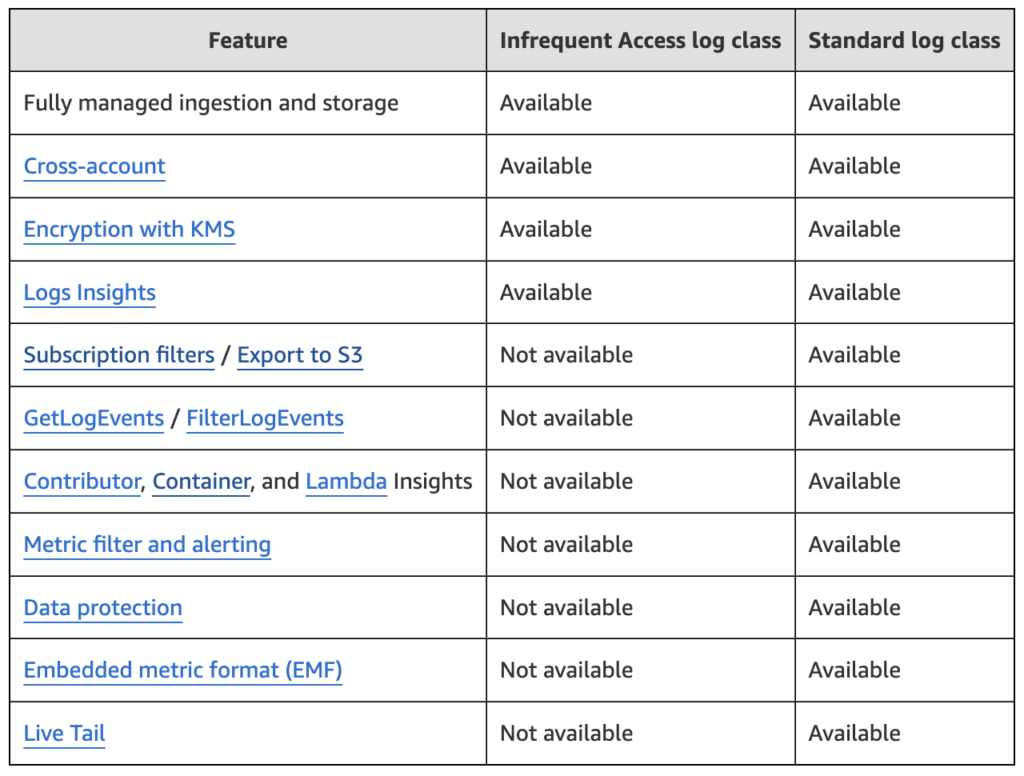

This price reduction does not come without tradeoffs and the below needs to be considered thoroughly before adoption.

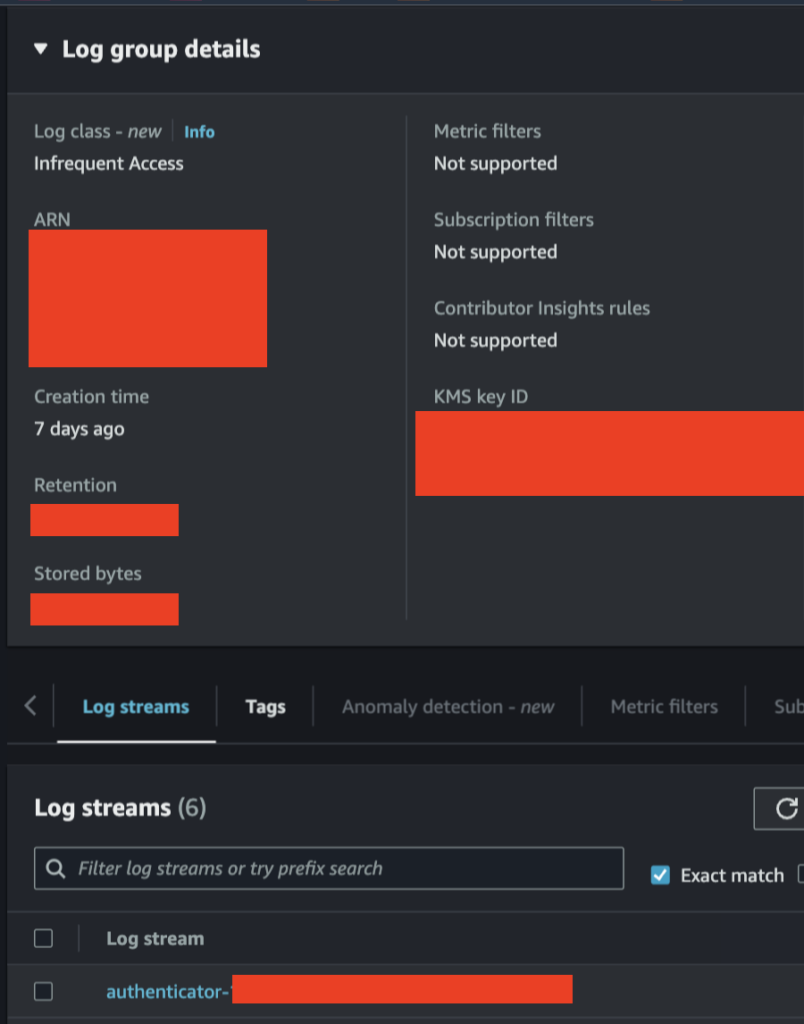

For certain EKS clusters at Autify, we decided that features such as Live Tail, metric filters/alerting, and Export to S3 were worth forgoing in order to save hundreds of dollars per month on ingest fees. Logs are still fully queryable via CloudWatch Logs Insights and can still be encrypted, which addressed our primary concerns. Everything else can be replaced via other mechanisms.

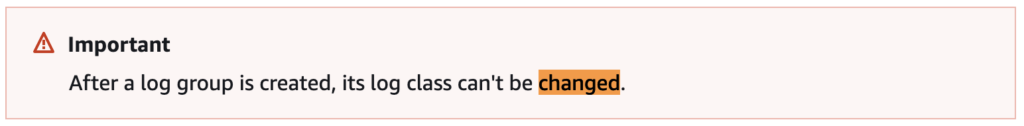

One note of great importance! Your existing cluster log group cannot be changed to Infrequent Access from Standard. More information on that topic will be available in the Implementation section below.

Autify Realized Cost Savings

Once we switched our production EKS clusters to emit logs to the Infrequent Access class we immediately began to see the advertised 50% reduction in CloudWatch Logs log ingest fees.

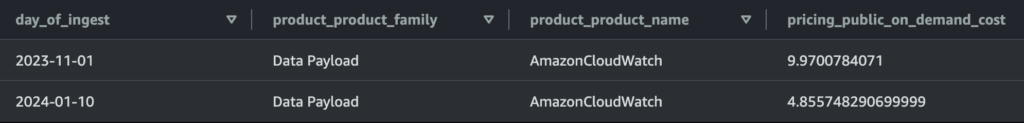

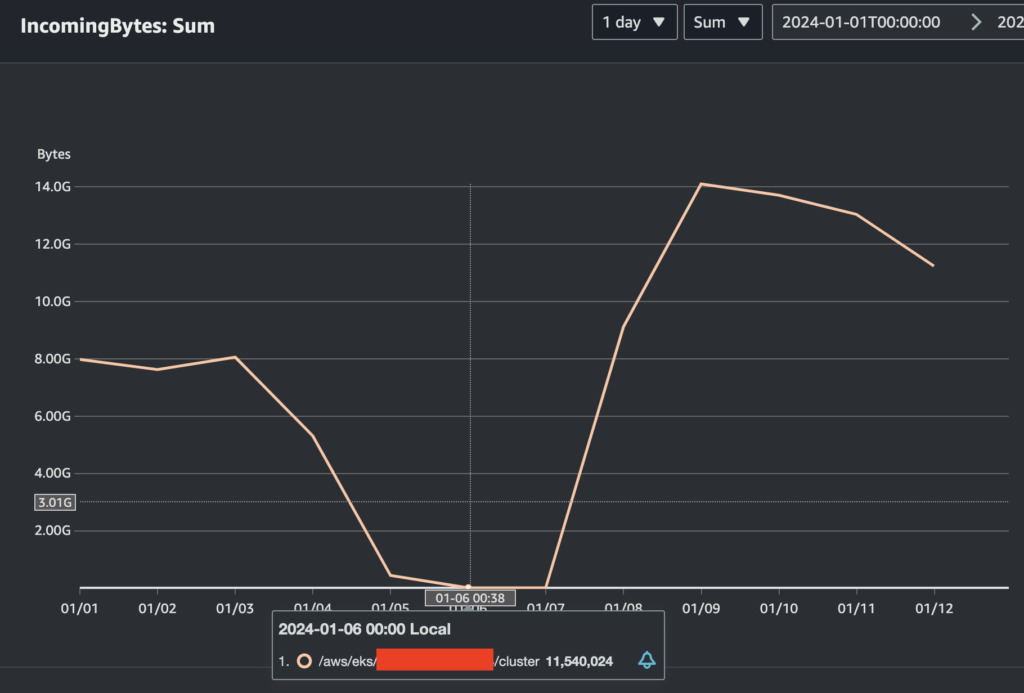

Below is actual data from one of our busiest EKS production clusters. With the Standard class for CloudWatch Logs we were paying ~$10/day or ~$3650/year ($10 per day * 30.44 avg. days in month * 12 months in year) in CloudWatch Logs fees:

With this single log group now configured for Infrequent Access, we stand to realize the following savings on our most active cluster:

Standard ($9.97/day * 30.44 avg. days a month * 12 months a year ≈ $3,642/year) - Infrequent Access ($4.86/day * 30.44 avg. days a month * 12 months a year ≈ $1,775/year) =

+$1,867/year saved on log ingest on this single log group
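The same calculation can be sketched in Python if you want to plug in your own daily figures. The two daily costs are the ones reported above; the function and variable names are only illustrative.

```python
# Annualize a daily CloudWatch Logs ingest cost and compare the two log classes.
AVG_DAYS_PER_MONTH = 30.44
MONTHS_PER_YEAR = 12


def annual_cost(daily_cost_usd: float) -> float:
    """Project a daily cost in USD over an average year."""
    return daily_cost_usd * AVG_DAYS_PER_MONTH * MONTHS_PER_YEAR


standard = annual_cost(9.97)            # daily cost observed with the Standard class
infrequent_access = annual_cost(4.86)   # daily cost observed with Infrequent Access
savings = standard - infrequent_access

print(f"Standard:          ${standard:,.0f}/year")
print(f"Infrequent Access: ${infrequent_access:,.0f}/year")
print(f"Savings:           ${savings:,.0f}/year ({savings / standard:.0%})")
```

The observed reduction works out to roughly half of the Standard cost, which lines up with the advertised 50% discount on ingest.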

You can reproduce the above figures for yourself by querying Cost and Usage Reports with Amazon Athena, using the following query we wrote at Autify:

🗣 Modify the following:

- FROM statement to point to your <YOUR COST AND USAGE REPORT> database/table

- WHERE statement line_item_resource_id for <YOUR CLUSTER NAME>

- WHERE statement TIMESTAMP for your specific <DATE 1> and <DATE 2> (or change it to be a range using AND, <=, >=)

SELECT CAST(DATE_TRUNC('day', "line_item_usage_start_date") AS DATE) AS day_of_ingest,
       "product_product_family",
       "product_product_name",
       SUM("pricing_public_on_demand_cost") AS pricing_public_on_demand_cost
FROM <YOUR COST AND USAGE REPORT>
WHERE (
        DATE_TRUNC('day', "line_item_usage_start_date") = TIMESTAMP '<DATE 1>' OR
        DATE_TRUNC('day', "line_item_usage_start_date") = TIMESTAMP '<DATE 2>'
      )
  AND "product_product_name" = 'AmazonCloudWatch'
  AND "product_product_family" = 'Data Payload'
  AND "line_item_resource_id" LIKE '%/aws/eks/<YOUR CLUSTER NAME>/cluster'
GROUP BY DATE_TRUNC('day', "line_item_usage_start_date"), "line_item_resource_id",
         "product_region", "product_product_family", "product_product_name"
ORDER BY "pricing_public_on_demand_cost" DESC

Example Implementation

AWS Console

If you already have an active cluster with an active EKS cluster log group please follow these instructions:

- Turn “Off” EKS Control Plane logging for all logs for your cluster.

- Export the logs to S3 for the cluster’s Control Plane log group (beware! there is a cost associated with exporting to S3 from CloudWatch).

- Note down the log group name and then delete it (this cannot be reversed – make sure you have fully thought this through!).

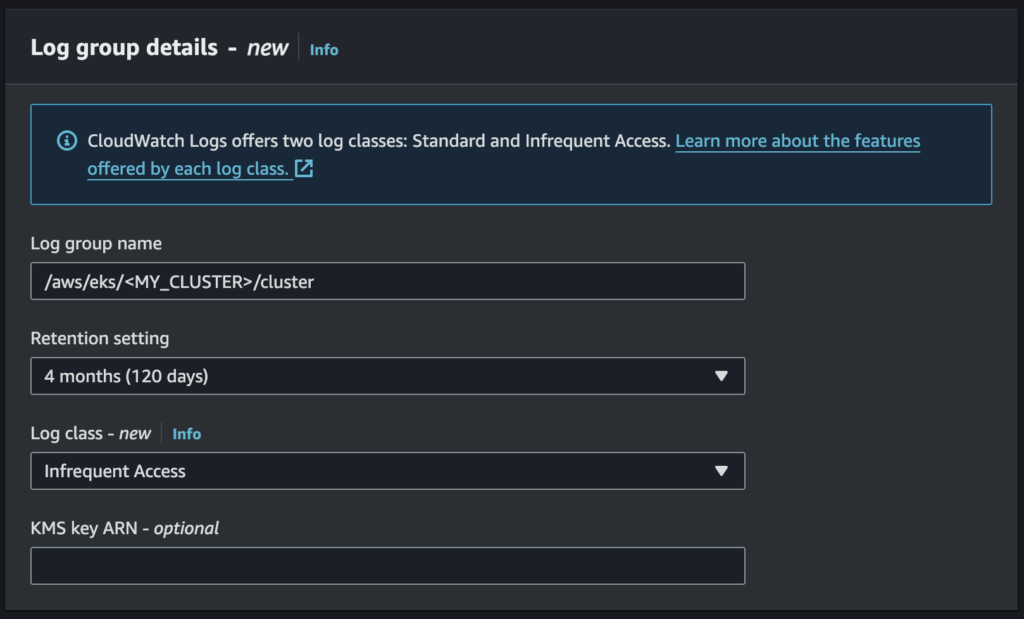

- Recreate the log group with the same name (typically something like /aws/eks/<YOUR CLUSTER NAME>/cluster) and select Infrequent Access for log class.

- Turn back “On” each of the logs you’d like delivered to the newly created log group.

Now check your log group to ensure logs are being delivered and that the log class is properly configured.
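If you would rather verify the log class programmatically than in the console, a minimal sketch could look like the following. It assumes you pass in a boto3 CloudWatch Logs client (`boto3.client("logs")`) and relies on the `logGroupClass` field returned by `describe_log_groups`; the helper name is hypothetical, and pagination is ignored because the prefix is the full log group name.

```python
from typing import Optional


def get_log_group_class(logs_client, log_group_name: str) -> Optional[str]:
    """Return the log class of the exactly-named log group, or None if it does not exist."""
    response = logs_client.describe_log_groups(logGroupNamePrefix=log_group_name)
    for group in response.get("logGroups", []):
        # The prefix search can also match longer names, so compare exactly.
        if group["logGroupName"] == log_group_name:
            # Falling back to STANDARD when the field is absent is an
            # assumption of this sketch, not documented behavior.
            return group.get("logGroupClass", "STANDARD")
    return None


# Usage (requires boto3 and AWS credentials; the cluster name is a placeholder):
#   import boto3
#   logs = boto3.client("logs")
#   print(get_log_group_class(logs, "/aws/eks/<YOUR CLUSTER NAME>/cluster"))
```

A result of `INFREQUENT_ACCESS` confirms the recreated log group has the intended class; `None` means the log group was not found under that name.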

Infrastructure as Code

At Autify we prefer to use Terraform for all production infrastructure. The EKS Module is a project we leverage quite extensively. Regrettably, in the module’s current release (Version 19) Infrequent Access log class configuration is not supported. We have created a PR for the feature request and it is slated to be added to the next major release Version 20.

For now, you can easily work around this by managing the log group outside of the module (note: the same caveat from the previous section applies, in that the log group must not already exist before you run the code below):

locals {
  cluster_name = "YOUR_CLUSTER_NAME" # Replace with your actual cluster name
  vpc_id       = "YOUR_VPC_ID"       # Replace with your VPC ID
  subnet_ids   = ["YOUR_SUBNET_ID"]  # Replace with your Subnet IDs
}

resource "aws_cloudwatch_log_group" "cloudwatch_log_group_cluster" {
  name            = "/aws/eks/${local.cluster_name}/cluster"
  log_group_class = "INFREQUENT_ACCESS"
  skip_destroy    = true
}

module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "~> 19.0"

  cluster_name    = local.cluster_name
  cluster_version = "1.28"

  # Here we are disabling the creation of the log group since we already created it in the aws_cloudwatch_log_group.cloudwatch_log_group_cluster resource
  create_cloudwatch_log_group = false

  cluster_enabled_log_types = [
    "api",
    "audit",
    "authenticator",
    "controllerManager",
    "scheduler"
  ]

  vpc_id     = local.vpc_id
  subnet_ids = local.subnet_ids

  eks_managed_node_groups = {
    example = {
      min_size       = 1
      max_size       = 1
      instance_types = ["t3.small"]
      capacity_type  = "SPOT"
    }
  }

  # Explicitly declare a dependency on the CloudWatch Log Group
  depends_on = [
    aws_cloudwatch_log_group.cloudwatch_log_group_cluster
  ]
}

This basic example creates a functional EKS cluster that pushes its Control Plane logs to a log group created and managed outside of the EKS module, which is what allows us to set the Infrequent Access log class. Be sure to tear down the example when finished and adapt the concepts to fit your current setup.

Solution 2 – External Audit Log Shipping into S3 (kube-audit-rest)

Details

Shipping EKS Control Plane vended logs directly into S3 (without exporting from CloudWatch Logs) is currently unsupported. There is a PR open in the Containers Roadmap but it has no real indication of progress. For other services, shipping vended logs into Amazon S3 is not any cheaper than CloudWatch Logs Infrequent Access, so if support is added we expect it to carry a dramatic markup at $0.38/GB (here in Tokyo) compared with ingesting into S3 yourself, which we detailed thoroughly in Autify’s previous post: Leveraging Amazon S3 with Athena for Cost Effective Log Management (spoilers: it’s practically free!).

For now though there is another potential solution to consider. “Audit” logs make up the lion’s share of ingest cost vs. other security critical logs like “API server” and “Authenticator”. To illustrate this, take a look at what happens with the same production cluster mentioned earlier when turning off “Audit” logs. EKS cluster log ingest drops to mere Megabytes/day vs. 8+ GB/day:

kube-audit-rest is a project created with the aim to solve this exact problem:

Use kube-audit-rest to capture all mutation/creation API calls to disk, before exporting those to your logging infrastructure. This should be much cheaper than the Cloud Service Provider managed offerings which charges ~ per API call and don’t support ingestion filtering.

We tested this project at Autify and it works fairly well. At its core kube-audit-rest uses a ValidatingWebhookConfiguration in order to observe all API activity within the cluster and allows you to easily ship those records to a destination of your choosing (e.g. Amazon S3).
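To make the mechanism concrete, here is a minimal Python sketch of what an audit-only admission webhook does with each request: it records a few fields from the incoming AdmissionReview and always answers "allowed". This is not kube-audit-rest's actual implementation (that project is a separate codebase); the function name and the in-memory `audit_records` list are hypothetical, and a real webhook would serve this over HTTPS and write to durable storage.

```python
import json


def handle_admission_review(body: bytes, audit_records: list) -> bytes:
    """Record the request for auditing purposes and always allow it."""
    review = json.loads(body)
    request = review["request"]

    # Keep a handful of fields; the full request object is also available.
    audit_records.append({
        "operation": request.get("operation"),
        "resource": request.get("resource", {}).get("resource"),
        "namespace": request.get("namespace"),
        "name": request.get("name"),
        "user": request.get("userInfo", {}).get("username"),
    })

    # An audit-only webhook never rejects: it echoes the uid and allows.
    response = {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": {"uid": request["uid"], "allowed": True},
    }
    return json.dumps(response).encode("utf-8")
```

Because admission webhooks are only invoked for requests that create or change objects, this approach does not see read-only calls. The ValidatingWebhookConfiguration shown next is what tells the API server to send these requests to the service.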

apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration # Can also be a MutatingWebhookConfiguration if required
metadata:
  name: kube-audit-rest
  labels:
    app: kube-audit-rest
webhooks:
  - name: kube-audit-rest.kube-audit-rest.svc.cluster.local
    failurePolicy: Ignore # Don't block requests if auditing fails
    timeoutSeconds: 1 # To prevent excessively slowing everything
    sideEffects: None
    clientConfig:
      service:
        name: kube-audit-rest
        namespace: kube-audit-rest
        path: "/log-request"
      caBundle: "$CABUNDLEB64" # To be replaced
    rules: # To be reduced as needed
      - operations: [ "*" ]
        apiGroups: ["*"]
        apiVersions: ["*"]
        resources: ["*/*"]
        scope: "*"
    admissionReviewVersions: ["v1"]

Trade-offs

This solution is all about trade-offs. I’ll list them out as we saw them here at Autify.

Pros

- The solution can be deployed into your existing EKS cluster and hooked up to S3 for essentially free k8s Audit log ingest

- A distroless version is offered which makes the resource requirements minimal and the security posture stronger than running a full OS

- It captures all cluster object creation and mutation events

Cons

Unfortunately, the large number of caveats to the solution warrants its own section in the README. The biggest cons for us at Autify were:

- A higher degree of management overhead introduced compared to the simplicity of Solution 1

- Security concerns around running a pod that listens to all cluster activity

- Read-only API calls are not captured

Decisions, Decisions

While the cost savings from kube-audit-rest are extremely tempting, in the end we decided the cons were too significant to approve it for our EKS clusters. This may not be the case for your team, so please read through the project carefully, as the potential cost savings are quite nice if it fits your requirements.

Solution 3 – The “Off” Button

This solution may seem obvious but switching certain EKS Control Plane logs off is worth pointing out for the sake of being thorough. This is another big “It depends on your requirements” and turning off any of the 5 log types should not be taken lightly as they can each provide contextual information that may someday be crucial in a comprehensive security analysis.

A situation where it might be more acceptable to turn off certain EKS Control Plane logs might be:

- Minimal Use Cases: For development or testing environments where detailed monitoring and logging are not required.

It really can’t be recommended to turn off EKS Control Plane logs (especially Audit, API server and Authenticator logs) as they are absolutely considered a security best practice.
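If you do decide to switch off only some log types, the EKS API expects the full set split into enabled and disabled groups. The sketch below builds that `logging` structure in Python, keeping the security-critical types on; the helper name is hypothetical, and the resulting dictionary is what would be passed to boto3's `update_cluster_config(name=..., logging=...)`.

```python
ALL_LOG_TYPES = ("api", "audit", "authenticator", "controllerManager", "scheduler")


def build_cluster_logging(enabled_types) -> dict:
    """Build the EKS `logging` payload that enables only the given log types."""
    unknown = set(enabled_types) - set(ALL_LOG_TYPES)
    if unknown:
        raise ValueError(f"Unknown log types: {sorted(unknown)}")

    enabled = [t for t in ALL_LOG_TYPES if t in enabled_types]
    disabled = [t for t in ALL_LOG_TYPES if t not in enabled_types]

    entries = []
    if enabled:
        entries.append({"types": enabled, "enabled": True})
    if disabled:
        entries.append({"types": disabled, "enabled": False})
    return {"clusterLogging": entries}


# Keep Audit, API server and Authenticator; turn off Controller manager and Scheduler.
payload = build_cluster_logging(["api", "audit", "authenticator"])
print(payload)

# Usage (requires boto3 and AWS credentials; the cluster name is a placeholder):
#   import boto3
#   boto3.client("eks").update_cluster_config(name="<YOUR CLUSTER NAME>", logging=payload)
```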

From the EKS best practices guide:

When you enable control plane logging, you will incur costs for storing the logs in CloudWatch. This raises a broader issue about the ongoing cost of security. Ultimately you will have to weigh those costs against the cost of a security breach, e.g. financial loss, damage to your reputation, etc.

Summary

In this blog post, we explored cost-optimization solutions for managing EKS Control Plane logs.

- Solution 1 utilizes the new CloudWatch Logs Infrequent Access log class offering a strong balance between functionality and cost.

- Solution 2 makes use of the open source project kube-audit-rest and is extremely cost effective and flexible – assuming your team’s requirements allow for the various trade-offs of adoption.

- Solution 3 involves deactivating certain Kubernetes logs such as “Controller Manager” and “Scheduler” and is really only fit for lower-tier environments (dev/test) assuming your team’s security requirements are satisfied.

At the end of the day, the choice of solution (or mix of solutions) depends on each organization’s unique balance of cost, security, and operational complexity requirements.