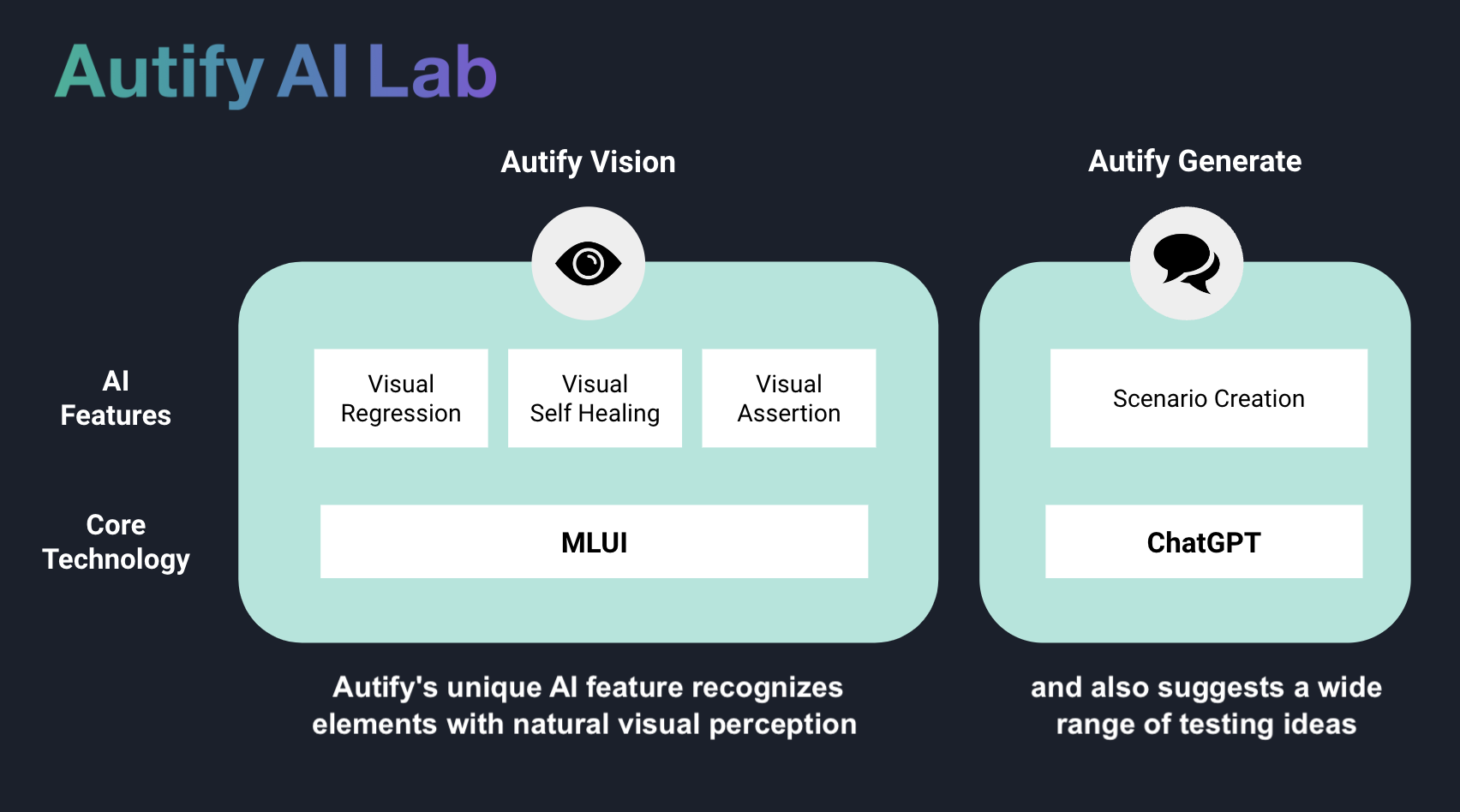

The development of machine learning technology is accelerating. Generative AI, including large language models (LLMs) such as ChatGPT and image generation models such as Stable Diffusion, is now used in many fields. It’s being integrated into various products and is assisting individuals with their work. New models and algorithms are also being introduced all the time.

Autify, which offers an AI-powered test automation platform of the same name, has invested heavily in machine learning since the launch of our service. This is not only to enhance Autify as a product but also because we firmly believe that machine learning is crucial to achieving Autify’s corporate mission: enhance people’s creativity by technology.

At Autify, we use ML in both our services: Autify for Web and Autify for Mobile. ML is used differently on each platform because of their distinct characteristics. While Autify for Web uses ML in a complementary manner, Autify for Mobile relies on ML so heavily that the service cannot function without it.

How Autify for Web began as an AI service

Since the launch of Autify for Web, AI has been used to locate elements, i.e., to search a test page and find the element on which a specific action should be performed. By using various methods to extract the target element’s characteristics from HTML, the AI can identify the same element even if the HTML changes.

Although this element search is largely rule-based, combining several pieces of data enables it to locate elements with a reasonable degree of accuracy.
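To make the idea of combining several pieces of data concrete, here is a minimal Python sketch of a rule-based locator. It is not Autify’s implementation: the `Element` class, the `WEIGHTS` values, and the `similarity` and `locate` functions are all hypothetical names chosen for illustration. It scores each candidate element against the characteristics captured at recording time and picks the best match, so a changed `id` alone does not prevent the element from being found.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Element:
    """A simplified view of an HTML element (hypothetical structure)."""
    tag: str
    attrs: Dict[str, str] = field(default_factory=dict)
    text: str = ""


# Illustrative weights only; these values are assumptions, not tuned numbers.
WEIGHTS = {"tag": 1.0, "text": 2.0, "id": 3.0, "name": 2.0, "class": 1.0}


def similarity(recorded: Element, candidate: Element) -> float:
    """Return a score in [0, 1]: the weighted share of matching characteristics."""
    score = 0.0
    if recorded.tag == candidate.tag:
        score += WEIGHTS["tag"]
    if recorded.text and recorded.text == candidate.text:
        score += WEIGHTS["text"]
    for key in ("id", "name", "class"):
        value = recorded.attrs.get(key)
        if value is not None and value == candidate.attrs.get(key):
            score += WEIGHTS[key]
    return score / sum(WEIGHTS.values())


def locate(recorded: Element, candidates: List[Element]) -> Optional[Element]:
    """Pick the candidate most similar to the recorded element, if any."""
    if not candidates:
        return None
    return max(candidates, key=lambda c: similarity(recorded, c))
```

In this sketch, a button whose `id` changed from `submit` to `submit-v2` would still be selected as long as its tag, text, and class continue to match better than any other candidate on the page.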

ML in Autify for Web

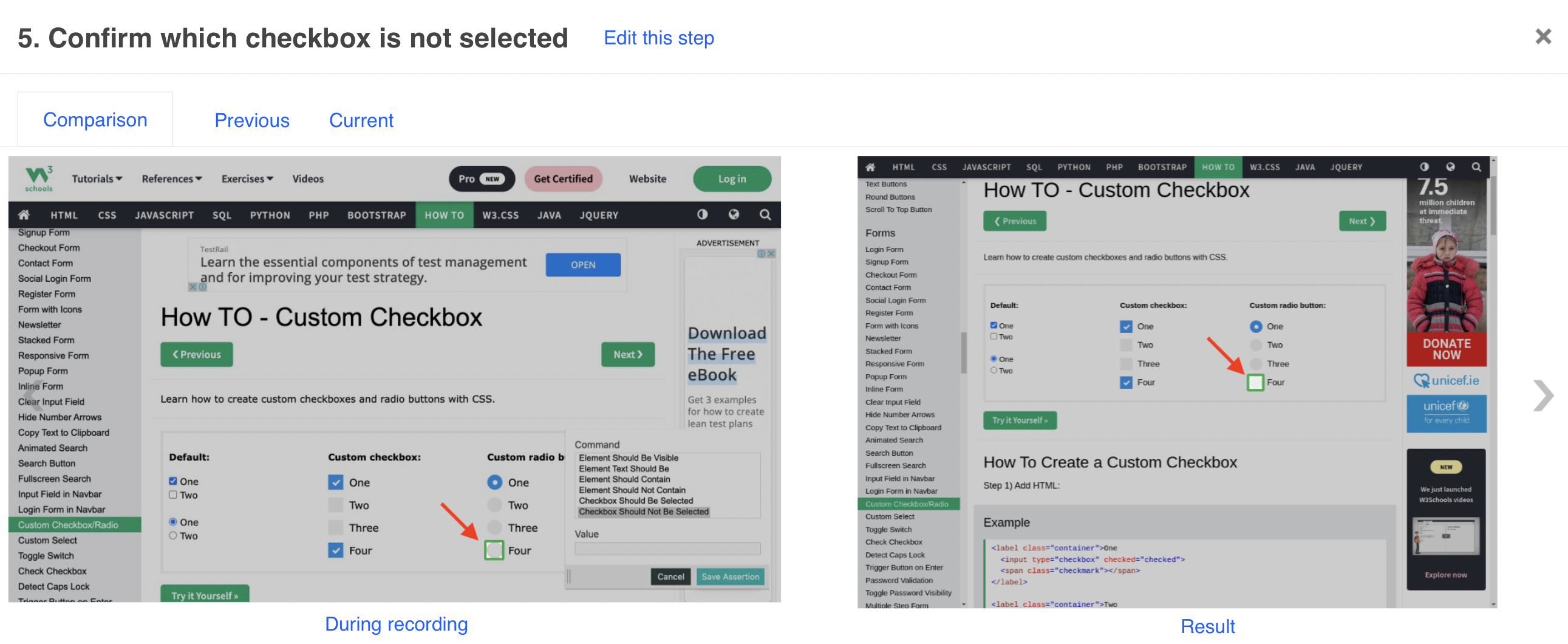

Assertion against checkboxes and radio buttons

Checkboxes and radio buttons in web applications are basic UI elements that allow users to turn something on or off. However, despite their simplicity, they can be surprisingly complex. They come in various designs and implementations: some use the standard input tag, while others use generic HTML tags like div and span styled with CSS.

Sometimes it’s hard to tell whether a checkbox is on or off, even for a human who has never seen it before. It is harder still for conventional record-and-playback testing. Specifically, users couldn’t determine the state of such checkboxes using Autify’s standard features, so they had to work around this with JS Steps that read the value set on the element to determine whether the checkbox was checked.

To solve this problem, we introduced a machine learning model that determines the status of a checkbox based on its appearance.

This feature enables users to check a wider range of checkboxes and radio buttons with different appearances, no matter how they are implemented internally.

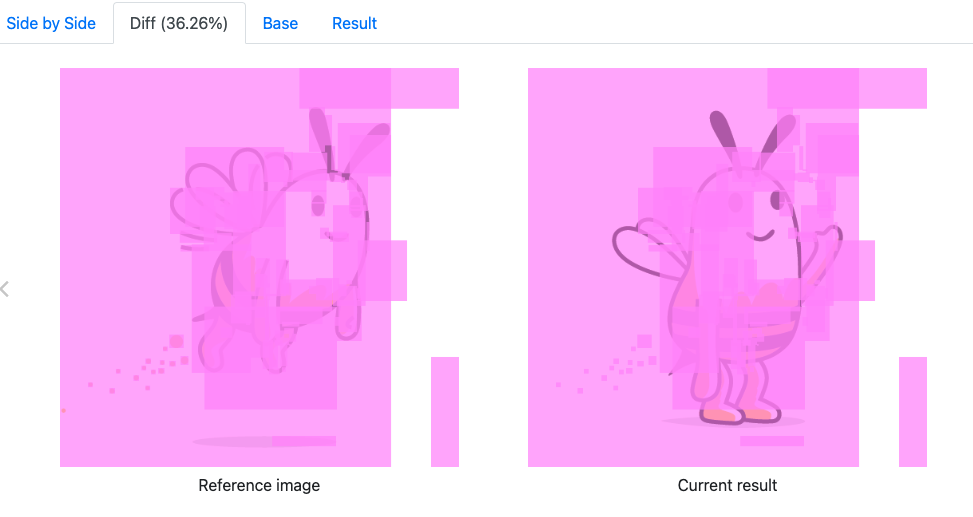

Visual regression testing

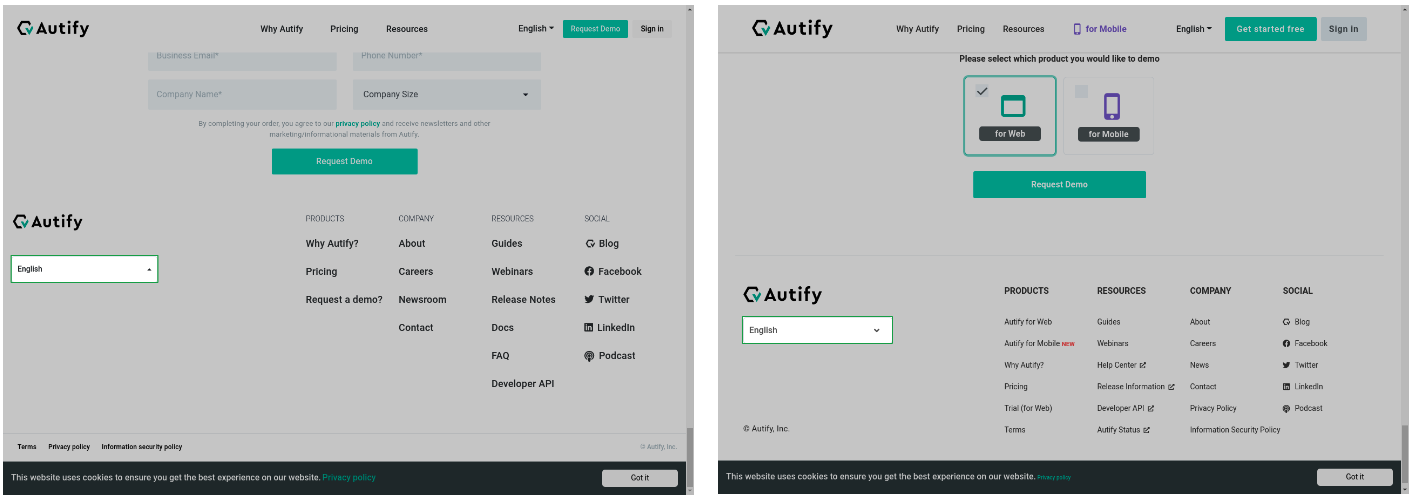

In 2022, we released the Visual Regression testing feature, which detects differences by comparing screenshots from past and present test executions.

This feature compares screenshots pixel by pixel while tolerating some scrolling and screen size changes. Its intended uses include detecting broken designs caused by CSS not being applied and unintentional changes in element placement.
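The following Python sketch illustrates one simple way a pixel-by-pixel comparison can tolerate a small amount of scrolling. It is an assumption-laden illustration, not the algorithm Autify uses: images are plain lists of rows of grayscale values with equal width, and the function names `diff_ratio` and `best_diff` and the `max_shift` parameter are hypothetical.

```python
from typing import List

Image = List[List[int]]  # rows of grayscale pixel values; equal widths assumed


def diff_ratio(before: Image, after: Image, dy: int = 0) -> float:
    """Fraction of differing pixels when row y of `before` is compared
    with row y + dy of `after`. Rows without a counterpart are skipped."""
    compared = 0
    differing = 0
    for y, row in enumerate(before):
        ya = y + dy
        if not 0 <= ya < len(after):
            continue
        for x, pixel in enumerate(row):
            compared += 1
            if pixel != after[ya][x]:
                differing += 1
    # If nothing overlaps, treat the images as entirely different.
    return differing / compared if compared else 1.0


def best_diff(before: Image, after: Image, max_shift: int = 2) -> float:
    """Smallest diff ratio over vertical shifts in [-max_shift, max_shift]."""
    return min(
        diff_ratio(before, after, dy)
        for dy in range(-max_shift, max_shift + 1)
    )
```

A test could then flag a visual regression when `best_diff` exceeds a chosen threshold. A production implementation would also need to guard against spurious matches when the overlapping region is very small, which this sketch does not do.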

In 2023, we released a feature that enables users to perform visual regression testing by adding an assertion step that checks the appearance of elements. Rather than comparing the entire screen, this feature allows you to focus on finding differences in a specific element.

For now, these features rely on basic image processing rather than machine learning. However, we believe that machine learning can be applied to visual regression testing in many ways, and many visual information processing models are emerging, so we will continue our research in this area.

Looking ahead

The use of machine learning in Autify for Web is still quite limited. However, we believe that machine learning has a vast range of applications in web app testing.

We are exploring ways to use machine learning to improve the test execution and recording experience, or even to create disruptive innovations that simplify the entire testing process.

ML in Autify for Mobile

As mentioned above, Autify for Web uses HTML information to extract the elements necessary for running tests. If the test target is a web application, we can use HTML, where the page information is well structured.

When it comes to mobile application testing, there is no complete, structured information like HTML. For this reason, when Autify for Mobile was first launched, it used APIs to identify elements from the page source.

However, test runs were negatively impacted due to insufficient information and poor performance, so we released a feature that processes visual information using our proprietary machine-learning model and performs actions such as finding elements. This is what Autify for Mobile primarily uses today.

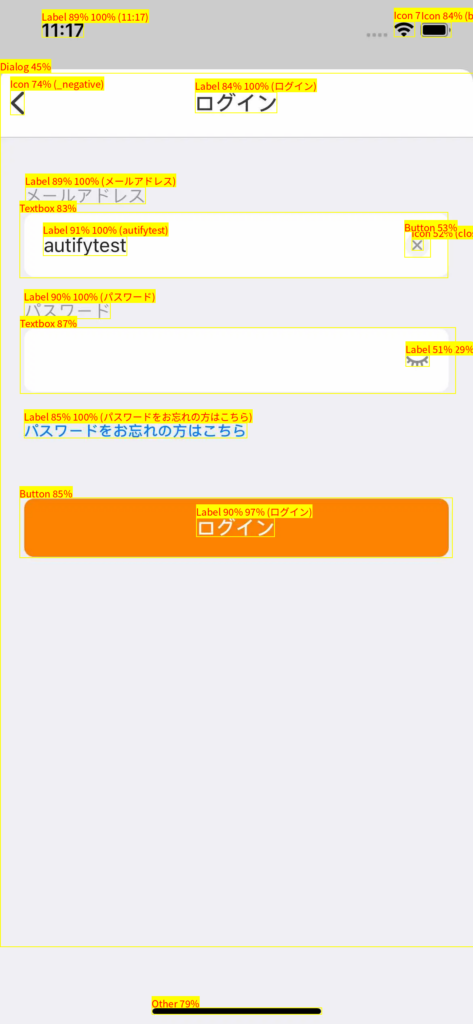

Since this is a machine learning model that extracts elements from visual information in UI screenshots, we internally refer to it as the MLUI model.

Recording

With Autify for Mobile, users record test scenarios using emulators on our server. When doing so, the MLUI model determines which element the user tapped based on visual information. This information is used during test runs.

Test execution

When a test is executed, the model looks at the screenshot at each step and determines which element should be tapped, using the same mechanism as when the test was recorded. The test execution engine then taps that element.

If the model finds the exact same element as when the test was recorded, the test will run without encountering issues. However, it’s possible that the mobile application under test has been modified, and some elements may be different, such as when a button is moved.

Even in these cases, the MLUI model draws on several pieces of information about the element so that the execution engine can tap the element that is most likely the same one. (The test will fail if the appearance changes significantly or if the element that should be tapped has disappeared.) This mechanism, which automatically detects changes in elements and still executes the test, is called self-healing.
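The selection logic behind self-healing can be sketched in Python as follows. This is only an illustration of the idea of picking the most likely match and failing when nothing is close enough. The real MLUI model works from visual information in screenshots; the hand-written scoring here merely stands in for it. The `Detected` class, the score weights, and the `threshold` value are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Detected:
    """An element found on a screenshot (hypothetical structure)."""
    kind: str                                 # e.g. "button"
    label: str                                # visible text, if any
    box: Tuple[float, float, float, float]    # (x, y, width, height), normalized to 0..1


def _center(box: Tuple[float, float, float, float]) -> Tuple[float, float]:
    x, y, w, h = box
    return x + w / 2, y + h / 2


def match_score(recorded: Detected, candidate: Detected) -> float:
    """Combine kind, label, and position into one score in [0, 1].
    The 0.4 / 0.4 / 0.2 weights are illustrative assumptions."""
    kind = 1.0 if recorded.kind == candidate.kind else 0.0
    label = 1.0 if recorded.label == candidate.label else 0.0
    (rx, ry), (cx, cy) = _center(recorded.box), _center(candidate.box)
    distance = ((rx - cx) ** 2 + (ry - cy) ** 2) ** 0.5
    position = max(0.0, 1.0 - distance)
    return 0.4 * kind + 0.4 * label + 0.2 * position


def self_heal(recorded: Detected, detected: List[Detected],
              threshold: float = 0.7) -> Optional[Detected]:
    """Return the most likely match, or None when the step should fail."""
    best = max(detected, key=lambda c: match_score(recorded, c), default=None)
    if best is None or match_score(recorded, best) < threshold:
        return None
    return best
```

With these assumed weights, a "Submit" button that has moved to another part of the screen still scores above the threshold, while a button in the original position with a different label does not, so the step would fail rather than tap the wrong element.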

Improving the model

We are constantly improving the model, and its element extraction and self-healing capabilities have come a long way since its launch. However, not all elements are covered yet, and we train our models based on customer feedback. While the process from annotating training data to training the model is largely automated, the trained model is deployed into production manually.

This is because although training can improve some areas, it can cause test results to change in other areas. Since it’s important that software tests remain consistent no matter how many times they are run, we need to ensure that updating the model on our end doesn’t cause test results to change; test results shouldn’t change if the customer hasn’t changed the scenario or the application under test.

This is why we perform regression testing before we deploy the trained model to the production environment, not only to confirm that tests are now performing as expected, but also to ensure that the results of tests run in the past don’t change. The regression test is not yet perfect, and there have been cases where model updates have caused the test results to change. We are making rapid improvements to address this.
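As a rough illustration of this kind of pre-deployment check, the Python sketch below compares a newly trained model’s predictions against results recorded from past test runs and blocks deployment if any of them differ. It is a simplified assumption of how such a gate could look, not a description of Autify’s pipeline: the function names, the use of screenshot names as case identifiers, and the element IDs are all hypothetical.

```python
from typing import Callable, Dict, List


def changed_results(past_results: Dict[str, str],
                    predict: Callable[[str], str]) -> List[str]:
    """Return the cases whose prediction differs from the recorded result.

    past_results maps a case identifier (here, a screenshot name) to the
    element that was selected in a past run; predict is the new model.
    """
    return sorted(
        case for case, expected in past_results.items()
        if predict(case) != expected
    )


def safe_to_deploy(past_results: Dict[str, str],
                   predict: Callable[[str], str]) -> bool:
    """Allow deployment only if no past result changes."""
    return not changed_results(past_results, predict)


# Usage with a stand-in "model" backed by a lookup table (illustrative only).
past = {"login.png": "btn_login", "cart.png": "btn_checkout"}
new_model = {"login.png": "btn_login", "cart.png": "btn_back"}.get
print(changed_results(past, new_model))  # lists the cases that regressed
```

Reporting the specific cases that changed, rather than only a pass or fail flag, makes it easier to decide whether a difference is an intended improvement or a regression.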

Looking ahead

Machine learning plays such an important role in Autify for Mobile that the platform wouldn’t work without it. As with Autify for Web, it’s important to develop new features using machine learning, but it’s also vital to build a system that allows us to efficiently improve the current model without causing regressions. We plan to continue development while balancing these two factors.

Conclusion

Autify is actively using machine learning to automate web application and native mobile application testing, and we plan to release more features, large and small. Watch this space!
