Once we know which browsers our website or app needs to support best, we can devise a test strategy.

Strategizing Our Cross-Browser Tests

At first, testing a website or web application across different browsers may seem overwhelming. In practice, all it requires is a careful, thorough plan that leads to well-structured testing and spares us avoidable problems. On a large project, we need to test regularly enough to be sure that new features work for our target audience and that existing features remain stable and don’t break when new code is added.

Timing is key. Running our tests throughout the development process pays off far better than leaving them for the end. We not only save precious time (and money) but also find and fix bugs earlier.

We can design a workflow for testing and bug fixing and break it down into phases. For example:

1) Initial Planning > 2) Development > 3) Testing/Discovery > 4) Bug fixes/Iteration

We’ll want to repeat steps 2 to 4 as many times as it takes to get every feature on track. Let’s take a look at these phases in more detail:

In the initial planning phase, the first thing to do is figure out what the look and feel of our website will be: the content it will have, its functionality at a UI level, and so on. Assessing how much time we have before our deadline is very important in this phase, as is deciding which features to include and which technologies to use.

Divide and conquer, as the saying goes: a smart move once we move on to development is to divide our website or web app into modules (e.g. home page, catalog, shopping cart, payment flow) along with any subdivisions those components imply.

Ideally, all functionality will work in all target browsers. We may need to implement different code paths for certain features in different browsers to achieve the widest possible support. At some point, however, we need to either accept that certain features will not work the same way in all browsers and provide reasonable fallbacks for browsers that don’t support the full functionality, or accept that our website or web app is simply not going to work in certain (older? less popular?) browsers. Both are generally acceptable outcomes, provided they fit our user base.

In the testing phase we focus on new functionality. First, we must check that there are no blocking issues in our code that would prevent a feature from working.

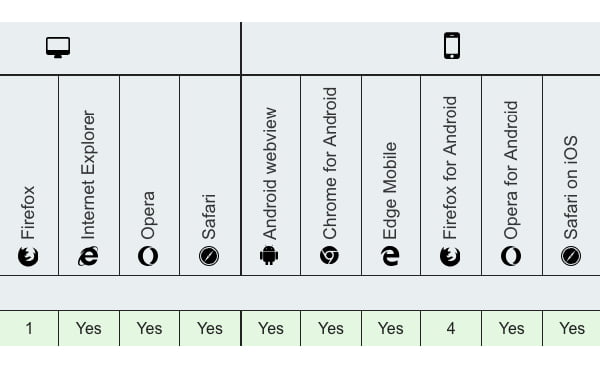

We can begin by testing in a few stable, popular browsers, e.g. Firefox, Safari, Chrome, and IE. Then we can move on to a few lo-fi accessibility tests, such as browsing without a mouse or using a screen reader to assess how navigable the site is for visually impaired users. We will of course also want to test on mobile platforms such as Android and iOS.

Testing should encompass the most popular desktop OS platforms (Windows, macOS, and Linux distributions like Ubuntu or Red Hat) for all browsers, popular or not. Then we’ll want to widen the list of browsers to include less popular ones such as Opera, Vivaldi, and Konqueror. We’ll try to test on real, physical devices wherever possible. Emulators and virtual machines come in handy when we lack the means to test every desired or required combination of OS, browser, and version.
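The OS and browser combinations described above can be written down as a simple test matrix. The following is a minimal Python sketch, not a definitive tool: the names `OSES`, `BROWSERS`, `ONLY_ON`, and `build_matrix` are hypothetical, and the platform restriction for Safari is an assumption made for illustration.

```python
from itertools import product

# Hypothetical lists, taken from the platforms and browsers named in the text.
OSES = ["Windows", "macOS", "Ubuntu"]
BROWSERS = ["Firefox", "Chrome", "Safari", "Opera", "Vivaldi"]

# Assumption for illustration: browsers we only plan to test on some platforms.
ONLY_ON = {"Safari": {"macOS"}}


def build_matrix(oses, browsers, only_on):
    """Return every (os, browser) pair that is worth scheduling."""
    return [
        (os_name, browser)
        for os_name, browser in product(oses, browsers)
        # A browser with no entry in only_on is tested on every OS.
        if os_name in only_on.get(browser, set(oses))
    ]


matrix = build_matrix(OSES, BROWSERS, ONLY_ON)
for os_name, browser in matrix:
    print(f"{os_name:8} {browser}")
```

Each pair in `matrix` is one configuration to cover with a real device, an emulator, or a virtual machine. Browser versions could be added as a third axis in the same way.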

Another option is user groups: that is, asking people outside the development team to test our website on particular OS platforms and browsers.

And, last but not least, we can make a very smart move by adopting automation tools. Besides saving us a lot of time, they can take over repetitive tasks once the components under test reach a certain level of stability.

There are free, open-source tools as well as commercial ones. Practically all of them can help us automate most of our test sets and suites, whether regression or end-to-end tests. We’ll expand on this topic later.

Before even attempting to fix a newly found bug, we need to isolate it to the point where we can identify fairly precisely where and how it happens.

We’ll begin by gathering all the information we can about it (user flow, app component, platform, device, browser version, etc.), then try to reproduce it on several other configurations and combinations of those: for example, the same browser and version on a different OS, or a different browser and version on the same OS. We then include all the behavior details in the report that the developers will use to fix the bug.
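One way to keep this information organized is a small structured bug report. The sketch below is only an illustration in Python: `Config`, `BugReport`, `variations`, and all field names and sample values are hypothetical, chosen to mirror the details listed above.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Config:
    os: str
    browser: str
    version: str


@dataclass
class BugReport:
    title: str
    user_flow: str
    component: str
    found_on: Config
    # Maps each configuration we retried to whether the bug reproduced there.
    reproductions: dict = field(default_factory=dict)

    def record(self, config, reproduced):
        self.reproductions[config] = reproduced

    def affected(self):
        """Configurations where the bug was reproduced."""
        return [c for c, hit in self.reproductions.items() if hit]


def variations(found_on, other_oses, other_browsers):
    """Vary one axis at a time: same browser on other OSes,
    then other browsers (name, version) on the same OS."""
    same_browser = [Config(o, found_on.browser, found_on.version) for o in other_oses]
    same_os = [Config(found_on.os, b, v) for b, v in other_browsers]
    return same_browser + same_os


# Hypothetical example values.
origin = Config("Windows", "Firefox", "115")
report = BugReport("Cart total not updated", "add item > open cart",
                   "shopping cart", origin)
to_try = variations(origin, ["macOS"], [("Chrome", "120")])
report.record(to_try[0], True)    # reproduced on macOS / Firefox
report.record(to_try[1], False)   # not reproduced on Windows / Chrome
print(report.affected())
```

Varying one axis at a time makes it easier to tell whether a bug follows the browser or the operating system.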

After we’ve done all that, and the bug is finally fixed, we’ll repeat the whole process to make sure the fix actually solved the issue and is not causing breakages in other parts of the code.
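The re-check after a fix can be sketched as a comparison of per-configuration results recorded before and after the change. This is a minimal Python illustration under stated assumptions: the function `verify_fix`, the configuration labels, and the sample results are all hypothetical.

```python
def verify_fix(before, after):
    """Compare results recorded before and after a fix.

    `before` and `after` map a configuration label to True (pass) or
    False (fail). A configuration missing from `after` counts as failing.
    """
    fixed = [c for c in before if not before[c] and after.get(c, False)]
    still_failing = [c for c in before if not before[c] and not after.get(c, False)]
    regressions = [c for c in before if before[c] and not after.get(c, False)]
    return fixed, still_failing, regressions


# Hypothetical results for three configurations.
before = {"Windows/Firefox": False, "macOS/Safari": True, "Ubuntu/Chrome": True}
after = {"Windows/Firefox": True, "macOS/Safari": True, "Ubuntu/Chrome": False}

fixed, still_failing, regressions = verify_fix(before, after)
print("fixed:", fixed)
print("still failing:", still_failing)
print("regressions:", regressions)
```

A non-empty `regressions` list means the fix broke something that used to work, so the cycle goes around again.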

Automation Tools

What are the Best Automation Testing Tools Available? This is quite a tricky question. The truth is, the tools on the market each have their own pros and cons, and in most cases the pricing is not transparent. We recommend doing some research and looking at a few lists online, avoiding companies that don’t publish their pricing, and choosing companies that offer more than a tool, with real people and outstanding customer success teams to support your QA team.

In our case, Autify is a full testing suite where you can:

- Build tests easily with a no-code, intuitive UI.

- Create Test Scenarios by interacting with the application as a user would.

- Run Test Scenarios on multiple browsers simultaneously.

- Automate faster, automate more.

We have a long-term roadmap to expand Autify’s capabilities and product lineup, which consists of three phases:

- Increase automation coverage. We will provide a product that can automate a greater proportion of test cases that are currently being tested manually.

- Increase overall test coverage. Since test coverage varies from person to person, we’ll provide a product that increases the test coverage along with the automation percentage.

- Eliminate the test phase. Automate tests from the initial stage of development, so that testing drives development. Once all tests pass, the software can be released without a separate test phase.

Autify is for you if:

- The development team wants to run tests first.

- The QA team tests multiple products across the board.

- The QA team is short staffed.

- Test automation seems too difficult.

- You have struggled with test automation before.

- You haven’t got around to maintaining test code.

You can see our clients’ success stories here: https://autify.com/why-autify

- Autify is positioned to become the leader in Automation Testing Tools.

- We raised 10M in our Series A in Oct 2021 and are growing fast.

We have different pricing options available in our plans: https://autify.com/pricing

- Small (Free Trial). Offers 400~ test runs per month, 30 days of monthly test runs on 1 workspace.

- Advance. Offers 1000~ test runs per month, 90~ days of monthly test runs on 1~ workspace.

- Enterprise. Offers Custom test runs per month, custom days of monthly test runs and 2~ workspaces.

All plans include testing for an unlimited number of apps and an unlimited number of users.

We sincerely encourage you to request our Free Trial and our Demo for both Web and Mobile products.