Humans often err on the side of caution, and in software engineering, testing is the classic way to do that. Unit tests, manual QA testing, and stress tests all help ensure that software works as intended under a variety of conditions.

Across industries, manual testing has been used to simulate the end-user experience and check for corner cases. Having an extra pair of eyes test a product end to end has always added a lot of value. In this article, we’ll cover what manual testing entails, describe the main types of manual testing, and explore how to choose the right kind for your situation.

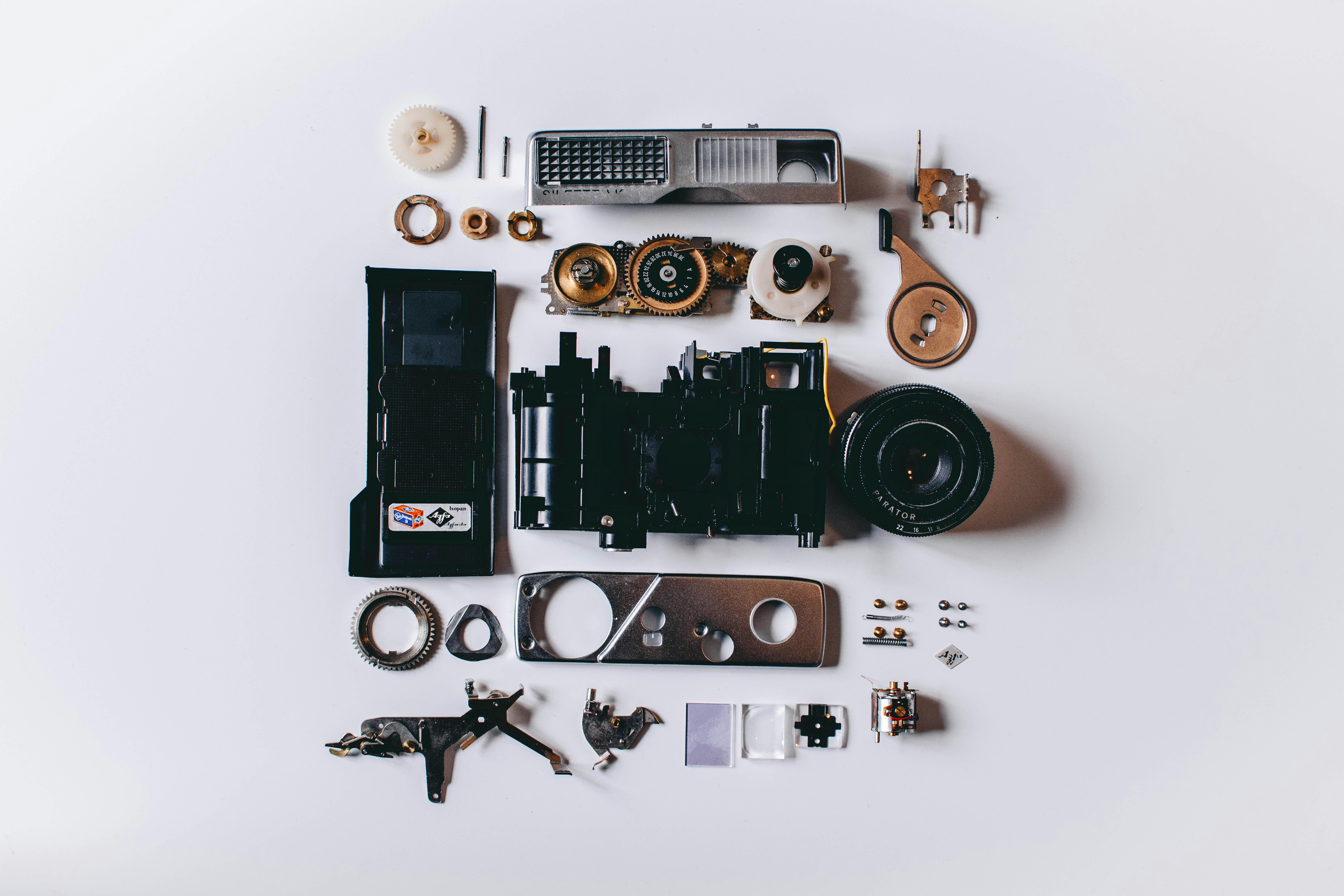


What Is Manual Testing?

The simplest explanation is in the name: manual testing covers any testing that a person performs by hand, rather than through scripts or tools, to find defects in software. It sounds rudimentary, doesn’t it? With so many tools available, why advocate for manual testing?

At first glance, manual testing and automation seem to be at odds.

I want to stress that manual testing and automation work in conjunction with each other. In the thick of a project, there usually isn’t enough time to automate every aspect of testing, and not everything can be tested through automation anyway. Many usability issues only show up when you put on a product hat and use the product intuitively, which means testing it by hand.

Every form of testing involves a good blend of manual and automated ways to get things done. In the next section, let’s take a closer look at the types of manual testing to get a better grasp of the terminology.

Types of Manual Testing

Let’s do a deep dive into the different types of manual testing:

Unit Testing

This is the most common form of testing among developers. It’s largely automated: developers write test code that checks the behavior of each unit of their code. Some follow the test-driven development (TDD) model and write the unit tests first, before the code they exercise.

Although unit testing is typically automated, developers also unit test manually, for example by calling a function in a REPL or console. This is especially common in the early phases of development, to quickly validate logic before writing formal tests. It’s less about long-term coverage and more about fast feedback while the code is still in flux.
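To make the contrast concrete, here is a minimal Python sketch. The function `apply_discount` is hypothetical, invented for illustration. Early on, a developer might simply type `apply_discount(100.0, 0.1)` into a REPL and eyeball the result; once the logic settles, the same checks can be captured as an automated unit test with the standard `unittest` module.

```python
import unittest


def apply_discount(price: float, rate: float) -> float:
    """Hypothetical function under test: apply a fractional discount."""
    if not 0 <= rate <= 1:
        raise ValueError("rate must be between 0 and 1")
    return round(price * (1 - rate), 2)


class ApplyDiscountTest(unittest.TestCase):
    # The same checks a developer might first try by hand in a REPL,
    # now written down so they run on every change.
    def test_ten_percent_off(self):
        self.assertEqual(apply_discount(100.0, 0.1), 90.0)

    def test_no_discount(self):
        self.assertEqual(apply_discount(59.99, 0), 59.99)

    def test_invalid_rate_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 1.5)
```

Saved in a module, this can be run with `python -m unittest <module_name>`. The manual REPL check gives instant feedback; the test class keeps that feedback from being lost once the code stabilizes.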

Black Box Testing

This form of testing is something we have all done at some point. Once we understand how the product is supposed to look and function, we can test its functionality by supplying various inputs and checking that the outputs match what we expect.

In this form of testing, the tester has no knowledge of the internal code structure. In layman’s terms, it means playing around with the application to check, for example, that every button click performs the expected action. This can give a good understanding of the functionality as well as any user experience quirks.

Black box testing also reveals which checks are repetitive and how the functionality behaves in practice, which highlights good candidates for automation.
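Once a tester notices they keep typing the same inputs into the same form, those checks can be written down as a table of inputs and expected outputs, often the first step toward automating them. The sketch below assumes a hypothetical username rule (3 to 16 characters; letters, digits, and underscores only). The `CASES` table is derived purely from that stated rule, without looking at how `is_valid_username` is implemented, which is what keeps it a black box check.

```python
import re


# Hypothetical implementation; a black box tester would never look at this.
def is_valid_username(name: str) -> bool:
    return re.fullmatch(r"[A-Za-z0-9_]{3,16}", name) is not None


# Cases written only from the spec: (input, expected result).
CASES = [
    ("alice", True),
    ("ab", False),         # below the minimum length
    ("a" * 16, True),      # exactly at the upper boundary
    ("a" * 17, False),     # one character over the boundary
    ("bob smith", False),  # spaces are not allowed
    ("user_01", True),
    ("", False),           # empty input
]


def run_cases(cases):
    """Return the cases whose actual result differs from the expected one."""
    return [(inp, exp) for inp, exp in cases if is_valid_username(inp) != exp]


if __name__ == "__main__":
    failures = run_cases(CASES)
    print("all passed" if not failures else f"failures: {failures}")
```

Note how the cases cluster around the boundaries of the rule; those are exactly the inputs a manual black box tester tends to try first.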

Gray Box Testing

Gray box testing sits right in the middle of black box testing and white box testing. You're not completely blind to how the system works, but you're not knee-deep in the source code either. Maybe you know how the API is structured or you’ve seen the database schema. That context helps you write more focused tests, especially for areas like login sessions, data flows between components, or how different systems talk to each other.

You’re still testing from the perspective of a user, but now you can anticipate where things might break under the hood. It’s the kind of testing that helps catch subtle integration issues that black box testing might miss and that white box testing might over-engineer. It’s a pragmatic choice when full access to the code isn’t possible but you need more than surface-level validation.
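Here is a small sketch of what that extra context buys you. Everything in it is hypothetical: the `users`/`sessions` schema, the `login` function, and the plain-text password (used only for brevity; real systems store hashes). It uses Python’s built-in `sqlite3` with an in-memory database. From the outside, a failed login just “fails”; knowing the schema lets the tester also confirm that no stray session row was written.

```python
import secrets
import sqlite3

# Hypothetical schema the gray box tester has seen, without reading the app code.
SCHEMA = """
CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT UNIQUE, password TEXT);
CREATE TABLE sessions (token TEXT PRIMARY KEY, user_id INTEGER REFERENCES users(id));
"""


def login(db: sqlite3.Connection, email: str, password: str):
    """Hypothetical login handler: returns a session token, or None on failure."""
    row = db.execute(
        "SELECT id FROM users WHERE email = ? AND password = ?", (email, password)
    ).fetchone()
    if row is None:
        return None
    token = secrets.token_hex(16)
    db.execute("INSERT INTO sessions (token, user_id) VALUES (?, ?)", (token, row[0]))
    return token


def session_count(db: sqlite3.Connection, user_id: int) -> int:
    # The gray box part: peek at a table the tester knows exists.
    return db.execute(
        "SELECT COUNT(*) FROM sessions WHERE user_id = ?", (user_id,)
    ).fetchone()[0]


db = sqlite3.connect(":memory:")
db.executescript(SCHEMA)
# Plain-text password only to keep the sketch short.
db.execute("INSERT INTO users (id, email, password) VALUES (1, 'a@example.com', 'pw')")

# A wrong password fails, and no session should be created as a side effect.
assert login(db, "a@example.com", "wrong") is None
assert session_count(db, 1) == 0

# A correct login returns a token and creates exactly one session row.
token = login(db, "a@example.com", "pw")
assert token is not None and session_count(db, 1) == 1
```

A pure black box tester would only see the first assertion in each pair; the schema knowledge adds the second.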


User Interface (UI) Testing

UI testing is where you put yourself in the shoes of the user and just… use the product. You click buttons, resize windows, open modals, and check how everything looks and feels. Does the button work on hover? Does the layout break on mobile? Is the font readable against that background? These are the small things that can quietly ruin a user’s experience if not caught.

While a lot of this can be automated now, there's still real value in someone manually going through the interface and thinking like a user. Automation might catch a missing button, but it won’t tell you that the spacing looks weird or that a dropdown feels clunky. That’s where human intuition shines.

Exploratory Testing

Exploratory testing is all instinct and experience. There’s no checklist, no script. You just dive into the product and start using it like a real person would: clicking around, trying different flows, intentionally doing the “wrong” thing to see what breaks. That’s the point. You’re not just verifying what should happen; you’re actively trying to uncover what no one anticipated.

This kind of testing works best when you already know the product. You’ve been around it long enough to spot when something feels off, even if you can’t quite put your finger on it yet. Maybe a modal doesn’t load right on a certain screen size, or a flow silently fails when the data is just slightly out of bounds. These are things structured tests might miss.

Exploratory testing is especially useful when timelines are tight or the feature is still evolving. It gives teams a quick, realistic pulse on whether the product holds up under messy, real-world usage, not just ideal paths someone wrote in a test case a month ago.

Integration Testing

A software product is an amalgamation of different services and tools. An application might use a database, some form of messaging stream, integrations with other services to fetch or send data, and so on. Integration tests are an important tool for confirming that all of these pieces work well together. For example, once the login module is built, how does it interact with user sessions, analytics, or permissions?

It’s particularly helpful in finding issues related to data flow, mismatched APIs, or errors in inter-component communication. Manual integration testing is useful in complex systems where mocking all interactions may be too time-consuming initially. It helps teams visualize how parts of the product actually connect in practice.

Integration testing focuses on how individual parts talk to each other. You’ve got one service handling payments and another managing user profiles, but do they sync up properly? Are there silent failures that don’t show up in isolation? Running these checks manually, especially early on, helps pinpoint gaps in communication between systems before they snowball into larger bugs.
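The payments-and-profiles example can be sketched in a few lines. Both services here are hypothetical and deliberately contain an integration bug: the payment side passes user IDs along as strings, while the profile side keys users by integer. Each service behaves correctly on its own, and the mismatch fails silently because the return value is ignored, which is exactly the kind of gap an integration check catches.

```python
from dataclasses import dataclass, field


@dataclass
class ProfileService:
    """Hypothetical profile store keyed by integer user ID."""
    plans: dict = field(default_factory=dict)

    def set_plan(self, user_id: int, plan: str) -> bool:
        if user_id not in self.plans:
            return False  # unknown user: reported only via the return value
        self.plans[user_id] = plan
        return True


@dataclass
class PaymentService:
    """Hypothetical payment handler that notifies the profile service."""
    profiles: ProfileService

    def charge(self, user_id: str, plan: str) -> None:
        # Deliberate bug for illustration: the string ID is passed through
        # unchanged, and the False return value is ignored.
        self.profiles.set_plan(user_id, plan)


def integration_check() -> bool:
    """Pay for an upgrade, then confirm the profile actually changed."""
    profiles = ProfileService(plans={42: "free"})
    payments = PaymentService(profiles)
    payments.charge("42", "pro")  # ID arrives as a string
    return profiles.plans[42] == "pro"


print(integration_check())  # False: the upgrade never reached the profile
```

Converting the ID (for example, `int(user_id)`) and checking the return value of `set_plan` would close the gap; the point is that neither service’s own tests would have revealed it.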

System Testing

This form of testing is really about zooming out and looking at the application as a whole. At this stage, individual components have been tested, but how do they behave when stitched together?

Manual system testing helps answer that by checking whether the software behaves correctly when the complete setup is in place. It’s holistic and gives testers a broad view of how well different modules cooperate under typical usage.

Acceptance Testing

Acceptance testing is that final moment where everyone asks, “Did we build the right thing?” It usually happens just before a feature ships. It’s the last checkpoint where product owners, QA leads, or even end users walk through the features to confirm they match what was promised at the start of the sprint.

This isn’t about catching bugs or broken flows; it's about making sure the features work the way the business needs them to. Does the new onboarding flow guide users the right way? Does the dashboard update reflect the data it’s supposed to? Can a customer check out without getting stuck or confused?

Acceptance testing often focuses on these high-impact flows. It mirrors how people will actually use the product in the real world, not how it performs in a perfect test environment. And because it’s so tied to expectations, this kind of testing has less to do with tools and more to do with trust between designers, developers, product, and users.

When this testing passes, you’re not just saying, “It works.” You’re saying, “We’re ready.”

Sanity Testing and Smoke Testing

Sanity testing and smoke testing often get lumped together, and while they’re similar, they serve slightly different purposes. Sanity testing is a quick check to make sure a recent change hasn’t broken something obvious. It’s informal, fast, and typically focused on the specific area of change.

Smoke testing is broader and gives you a sense of whether the major functions of the application are intact after a new build. Both are incredibly useful during rapid development, especially when done manually by someone who knows what “normal” looks like.
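When the same smoke checklist gets repeated after every build, it can be written down as a list of broad, shallow checks. In this minimal sketch the check functions are hypothetical stand-ins (in practice each might open a page or call an endpoint), and `checkout_reachable` is hard-coded to fail to simulate a broken build. A crash inside a check is counted as a failure rather than stopping the run.

```python
from typing import Callable


# Hypothetical checks standing in for "open the app", "load the login page", etc.
def app_starts() -> bool:
    return True


def login_page_loads() -> bool:
    return True


def checkout_reachable() -> bool:
    return False  # simulate a broken build


SMOKE_CHECKS: list[tuple[str, Callable[[], bool]]] = [
    ("app starts", app_starts),
    ("login page loads", login_page_loads),
    ("checkout reachable", checkout_reachable),
]


def run_smoke(checks) -> list[str]:
    """Run every check and return the names of the ones that failed."""
    failed = []
    for name, check in checks:
        try:
            ok = check()
        except Exception:
            ok = False  # a crash counts as a failed check
        if not ok:
            failed.append(name)
    return failed


print(run_smoke(SMOKE_CHECKS))  # ['checkout reachable']
```

A sanity check after a change to checkout would instead run a narrower list containing only the checkout-related items.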

Regression Testing

Regression testing checks the correctness of every area a change might affect. It’s not surface-level testing. Say you were building a fintech app and launching a new lending feature. As part of regression testing, one would also test all the other features in the app to make sure the new code doesn’t break any existing flows.

Because automating every flow in an app takes time, regression testing is often done manually, depending on the time and bandwidth available. Until that automation exists, having a person test the app holistically may be the quicker option.
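As flows stabilize, one lightweight way to make regression checks repeatable is to record what an existing flow produced before a change and compare against it afterward (sometimes called snapshot or baseline testing). In this sketch the `transfer_fee` rule and the recorded `BASELINE` values are hypothetical, standing in for an existing flow in the fintech app that the new lending feature must not disturb.

```python
# Hypothetical existing flow: 1% transfer fee, minimum 50 cents, capped at 500.
def transfer_fee(amount_cents: int) -> int:
    return min(max(amount_cents // 100, 50), 500)


# Hypothetical baseline recorded before the lending feature was merged:
# transfer amount (cents) -> fee (cents) the existing flow produced.
BASELINE = {1_000: 50, 10_000: 100, 49_999: 499, 1_000_000: 500}


def find_regressions(fn, baseline: dict) -> dict:
    """Return {input: (expected, actual)} for every output that changed."""
    changed = {}
    for amount, expected in baseline.items():
        actual = fn(amount)
        if actual != expected:
            changed[amount] = (expected, actual)
    return changed


print(find_regressions(transfer_fee, BASELINE))  # {} means nothing changed
```

The baseline captures only what a human already verified once, so this complements rather than replaces a person walking through the app.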

How to Choose the Right Manual Testing Type

Honestly, it depends on what you’re working on and how confident you already are. If something’s just been built and you want to make sure it doesn’t completely fall over, you’d probably go for a smoke test. It’s quick. You check the basics. If it passes, great, now you can dig deeper.

If you’re wrapping up a feature and want to make sure it actually solves what it was meant to, acceptance testing is the go-to. It’s less about “does it work” and more about “does it do what we asked for.”

When you’re trying to get a feel for how something looks or behaves, especially within the UI, nothing beats clicking through it yourself. That’s where manual UI testing really helps. Sometimes it’s the layout that’s off, or a button that works fine technically but feels awkward to use.

And if you're just trying to break the thing? You explore. You poke around, follow weird paths, act like a user would, and so on. That’s exploratory testing. No script, just instinct.

Working with a lot of moving parts? Then integration or system testing is what you'll need to make sure everything plays nicely together.

Most of the time, you mix a few of these. It’s not about picking one. It’s about what helps you sleep better before shipping.

When Should You Use Manual Testing?

Manual testing is highly effective when the design is still in progress, or when a feature is still in flux and writing automation now would be wasted effort. Tight deadlines and bandwidth crunches are another important reason to test manually.

Software development isn’t linear—it’s dynamic and has many moving parts and changes. One cannot always write automated tests for all the parts in parallel during development. A lot of these tests are developed post-release. So what does one do until then?

Teams use manual testing to verify the quality of the software being pushed out. And sometimes you just need someone to say, “Yep, this works.” Not every test is worth automating, especially if it’s a one-off bug fix or something that’s about to be rewritten.

Conclusion

As teams grow, manual testing alone becomes hard to scale. That’s where a platform like Autify Nexus comes in.

Nexus helps you build a repeatable, low-code automation layer over your manual workflows. It’s flexible enough to support exploratory QA, visual testing, and regression automation, all without needing to rebuild your entire process. You can integrate it into your existing CI/CD pipelines, write full-code when needed, and scale with confidence.

Whether you're just starting to define test cases or looking to upgrade your QA infrastructure, Autify Nexus helps modern teams bridge the gap between manual effort and automated speed.
